Product overview

Description

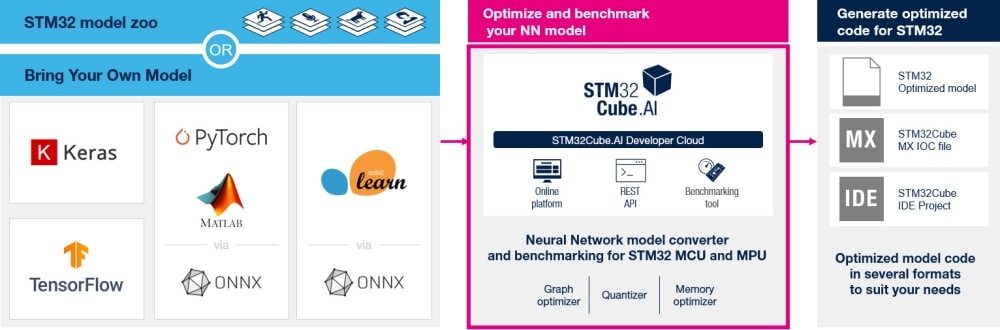

STM32Cube.AI Developer Cloud (STM32CubeAI-DC) is a free online platform and set of services for creating, optimizing, benchmarking, and generating artificial intelligence (AI) for STM32 microcontrollers and microprocessors based on Arm® Cortex® processors. It can take advantage of AI hardware acceleration (a neural processing unit, NPU) whenever the target hardware provides it.

STM32CubeAI-DC is built on ST Edge AI Core, the STMicroelectronics technology for optimizing neural network (NN) models for any STMicroelectronics product with AI capabilities. On STM32 MCUs, its performance is identical to that of the X-CUBE-AI Expansion Package used with STM32CubeMX. STM32Cube.AI Developer Cloud is available at stm32ai-cs.st.com.


All features

- Online GUI (no installation required) accessible with STMicroelectronics extranet user credentials

- Network optimization and visualization, providing the RAM and flash memory sizes needed to run the model on the STM32 target

- Quantization tool to convert a floating-point model into an integer model

- Benchmark service on the STMicroelectronics-hosted board farm, which includes various STM32 boards, to help select the most suitable hardware

- Code generator including the network C code and optionally the whole STM32 project

- STM32 model zoo:

  - Easy access to model selection, training scripts, and key model metrics, directly available for benchmarking

  - Application code generator from the user's model, with "Getting started" code examples

- ML workflow automation service with Python™ scripts (REST API)

- For STM32 MCUs, supports all the X-CUBE-AI features, such as:

  - Native support for various deep learning frameworks such as Keras and TensorFlow™ Lite, and support for all frameworks that can export to the ONNX standard format such as PyTorch™, MATLAB®, and more

  - Support for the 8-bit quantization of Keras networks and TensorFlow™ Lite quantized networks

  - Support for various built-in scikit-learn models such as isolation forest, support vector machine (SVM), K-means, and more

  - Possibility to use larger networks by storing weights in external flash memory and activation buffers in external RAM

  - Easy portability across different STM32 microcontroller series

- Supports all STM32 MPU series with the following features:

  - Native support for various deep learning frameworks such as Keras and TensorFlow™ Lite, and support for all frameworks that can export to the ONNX standard format such as PyTorch™, MATLAB®, and more

  - Support for the 8-bit quantization of Keras networks and TensorFlow™ Lite quantized networks

  - Support for various built-in scikit-learn models such as isolation forest, support vector machine, K-means, and more

  - CPU inference via TensorFlow™ Lite runtime or ONNX Runtime for the STM32 microprocessors that do not support AI hardware acceleration

  - NPU/GPU inference processing for the STM32 microprocessors that support AI hardware acceleration

- User-friendly license terms
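As a rough illustration of what converting a floating-point model into an integer model involves, and why it shrinks the flash footprint reported by the optimization step, the Python sketch below implements generic per-tensor asymmetric int8 affine quantization (q = round(x / scale) + zero_point). It is not the algorithm used by STM32CubeAI-DC or X-CUBE-AI: the helper names `quantize_int8`, `dequantize_int8`, and `weight_bytes` are hypothetical, and production tools may use per-channel or symmetric variants.

```python
from typing import List, Tuple

# Illustrative sketch only: generic per-tensor asymmetric int8 quantization.
# This is NOT the STM32CubeAI-DC / X-CUBE-AI implementation; names are hypothetical.

INT8_MIN, INT8_MAX = -128, 127


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map float values to int8 codes using q = round(x / scale) + zero_point."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # The 256 int8 levels (255 steps) span [lo, hi]; guard against an all-zero tensor.
    scale = (hi - lo) / (INT8_MAX - INT8_MIN) if hi > lo else 1.0
    # Zero point: the int8 code that represents the real value 0.0.
    zero_point = int(round(INT8_MIN - lo / scale))
    zero_point = max(INT8_MIN, min(INT8_MAX, zero_point))
    # Quantize each value and clamp to the int8 range.
    q = [max(INT8_MIN, min(INT8_MAX, int(round(v / scale)) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values: x ~= (q - zero_point) * scale."""
    return [(code - zero_point) * scale for code in q]


def weight_bytes(num_weights: int, bytes_per_weight: int) -> int:
    """Raw weight storage in bytes, ignoring scale/zero-point metadata."""
    return num_weights * bytes_per_weight


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 2.0]
    q, scale, zp = quantize_int8(weights)
    approx = dequantize_int8(q, scale, zp)
    print("int8 codes:", q, "scale:", scale, "zero point:", zp)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, approx)))
    # A hypothetical 100k-parameter model: float32 (4 bytes) vs int8 (1 byte) weights.
    print("float32:", weight_bytes(100_000, 4), "bytes; int8:", weight_bytes(100_000, 1), "bytes")
```

Running the script prints the int8 codes, the reconstruction error (about half a quantization step at most for values inside the range), and the 4x reduction in raw weight storage when moving from float32 to int8 weights.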


Featured videos

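This tutorial introduces the new STM32Cube.AI Developer Cloud software tool and its cloud infrastructure services. Learn the basics, then try a simple and effective new way to develop neural networks and deploy them on STM32 microcontrollers.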